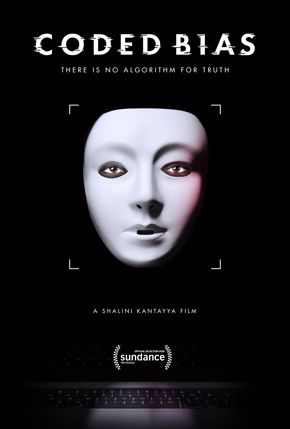

Virtual Cinema | “Coded Bias” Examines Inherent Biases in Artificial Intelligence

November 17, 2020

In this time of collective reckoning, as we are flooded with recommendations to help understand the ways minorities have historically been marginalized and disenfranchised, it is equally important that we identify the processes by which these inequities continue to be rewritten in contemporary society.

Facial recognition software (FRS), a technology that increasingly permeates our lives from smartphone access to international travel, is a prime example. Because FRS is so pervasive, its errors can have terrible consequences.

Earlier this year, the New York Times reported on an African American man who spent a night in jail based solely on an unsubstantiated FRS-generated match. Although he was eventually cleared (and the Detroit police department is now being sued), he continues to suffer consequences, ranging from his family’s PTSD to a bizarre digital afterlife in which his photo is being used for fake political Twitter accounts.

Wrongfully Accused by an Algorithm | New York Times | June 24, 2020

In what may be the first known case of its kind, a faulty facial recognition match led to a Michigan man’s arrest for a crime he did not commit. “Is this you?” asked the detective. The photo was blurry, but it was clearly not Robert Julian-Borchak Williams, who picked up the image and held it next to his face. “No, this is not me,” Mr. Williams said. “You think all black men look alike?”

A Fake Account Was Using a Real Man’s Image | CNN | October 15, 2020

One of the suspended accounts belonged to a user named Gary Ray. “YES IM BLACK AND IM VOTING FOR TRUMP,” a screenshot reads. “Twitter has suspended 2 of my other accounts for supporting Trump. Can you please give me some retweets and a follow?” Gary Ray isn’t real, but the man in his profile picture is. His name is Robert Williams, and he didn’t know the account had used his photo. “I was shocked by it,” Williams said.

Coded Bias, a documentary streaming on Virtual Cinema, explores how these injustices arise from algorithmic programming disproportionately based on racial and gendered defaults. The film centers on the charismatic Joy Buolamwini. A researcher at MIT’s Media Lab and a dynamic speaker, she is a crusader for algorithmic fairness, driven by her understanding of how poorly most FRS technology is equipped to process the diversity of human society. Coded Bias also upholds progressive ideals by featuring an entirely female set of scientists, statisticians, policy advisers, and STEM reporters. (As a mother and teacher, I want all girls to see this movie, and for parents to recognize that it is as much a lesson in gender support as in racial allyship.)

In this challenging year, a documentary critique of the destructive prejudices built into our technologies and social structures may not seem like an obvious movie choice to unwind with, but it is a credit to Buolamwini and her peers and collaborators that Coded Bias is uplifting, motivational viewing. Come for the consciousness-raising, but stay for the Joy (Buolamwini). Amidst all the current talk about allyship and change, Coded Bias shows us one very real, tangible, present space in which we can all demand actual, major change.

• Coded Bias: Your ticket ($10) supports the MFAH and provides a 2-day pass to the film.

About the Author

Karen Fang, a member of the MFAH film subcommittee, chairs the Media and Moving Image Initiative at the University of Houston and is the author of Arresting Cinema: Surveillance in Hong Kong Film.

Underwriting for the Film Department is provided by Tenaris and the Vaughn Foundation. Generous funding is provided by Nina and Michael Zilkha; The Consulate General of the Republic of Korea; Franci Neely; Carrin Patman and Jim Derrick; Lynn S. Wyatt; ILEX Foundation; L’Alliance Française de Houston; and The Foundation for Independent Media Arts.